One of the earliest card sorts I ran was unnecessarily complex, involving over 100 cards with around 80 participants. Yes, what a sucker for punishment! We had started off with a simple research goal and unwittingly turned it into a monster. We wanted to find out everything. Part of the problem, in this case, was a misunderstanding on the part of the client.

Client: “So it’s like a survey? So we should have a lot of people, right? And it’ll tell us how we should structure our Web site?”

Sam: “Well, actually, no. It will help us identify patterns in how users expect to find content, but it won’t give us a complete structure.”

Client: “But it’s like a survey, right? So we should get as many people as we can and make sure we represent all our market segments.”

Sam: “Well, it depends...” (And now seriously regretting I had mentioned the word survey at all!)

This story illustrates two things:

- Card sorting can easily get out of control when it tries to be all things to all people.

- Clients and stakeholders might mistakenly assume card sorting can produce a perfect answer.

The reality is that many people (and sometimes, if we’re honest, even we ourselves) expect card sorting to more or less create our information architecture. You don’t have an information architecture, or the one you currently have is bad? Why, that’s an easy problem to solve: just run a card sort.

Whoa! Hold up!

According to Lou Rosenfeld and Peter Morville’s information architecture bible, Information Architecture for the World Wide Web: Designing Large-Scale Web Sites (also known as the Polar Bear book), an information architecture comprises a navigation system, an organization system, a labeling system, and a search system. Card sorting certainly can provide input into an organization system (what content goes together) and a labeling system (what to call things), but it has very little to do with a navigation system or a search system.

More importantly, I’ve realized that card sorting can be all about timing.

Card sorting is most often employed for redesigns. In a redesign, it’s often the case that there is a huge amount of information that’s getting out of hand, there’s layer upon layer of internal politics, the technology is outdated and heavily constrained, there’s no central owner of the information, and worst of all, the business does not really have a clear Web and information strategy. The focus is typically on stuff the organization needs to deal with, not on investing in an asset. There’s no way a simple little card sort—or even a massively complex one—can fix all that.

When you do a card sort is as important as how you do it. Card sorting is often most useful once you’ve done some homework to find out about your users and understand your content. This knowledge provides a base from which you can create or improve your information architecture. Mistime your card sort and you run the risk of raising expectations that card sorting cannot possibly meet.

Messy Is Okay

I have a love/hate relationship with card sorting. Card sorting feels like it should give us all the answers, but it doesn’t. We have all this quantitative data, so why don’t we have clear-cut methods of analysis, and why in the world can’t we get clear-cut answers?

I’m no statistician, and neither are most usability professionals. So, frankly, I avoid statistical analysis methods that I cannot explain to others. But this means I don’t tend to get the kind of clear-cut answers that result in a site map, such as the ones dendrograms generate. I’m okay with that. In fact, I’m increasingly confident that a non-statistical approach might even be better.

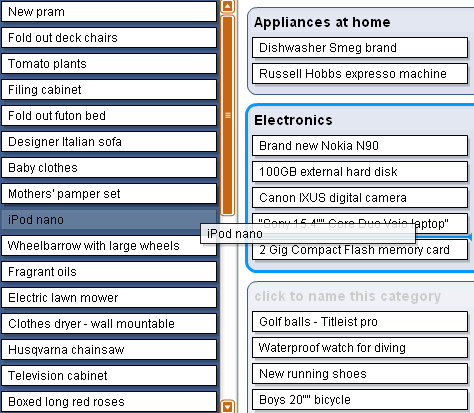

In most of the card sorts I’ve facilitated, the best way of doing analysis was to eyeball the data. I’ve learned there is a lot of value in taking this approach. There are a number of tools to help you eyeball your data, and Donna Maurer’s spreadsheet template in particular is quite helpful.
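For readers who would rather eyeball the data in a script than in a spreadsheet, here is a minimal sketch of one way to do it: count how often each pair of cards ends up in the same group. This is not Donna Maurer’s template or any particular tool’s method. The function name `pair_counts`, the data layout, and the sample cards are all hypothetical, made up purely for illustration.

```python
from collections import Counter
from itertools import combinations


def pair_counts(sorts):
    """Count, for each pair of cards, how many participants grouped them together.

    `sorts` is a list with one entry per participant. Each entry maps that
    participant's own group label to the list of cards placed in the group.
    (This layout is an assumption for the sketch, not a standard format.)
    """
    counts = Counter()
    for groups in sorts:
        for cards in groups.values():
            # Sort the cards so each pair is always keyed in the same order.
            for a, b in combinations(sorted(set(cards)), 2):
                counts[(a, b)] += 1
    return counts


# Hypothetical results from three participants sorting four cards.
sorts = [
    {"Money": ["Pricing", "Refunds"], "Help": ["Contact us", "FAQ"]},
    {"Support": ["Refunds", "Contact us", "FAQ"], "Buying": ["Pricing"]},
    {"Billing": ["Pricing", "Refunds"], "Help": ["FAQ", "Contact us"]},
]

counts = pair_counts(sorts)

# List the pairs from most to least often grouped together.
for (a, b), n in counts.most_common():
    print(f"{a} + {b}: {n} of {len(sorts)} participants")
```

The output is only a list to look over, not an answer. Pairs that nearly everyone grouped together are probably safe bets, while the pairs that split participants are usually where the judgment calls lie.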

I’ve accepted the fact that card sort analysis—much like usability test analysis—is often messy and subjective. It’s part science, but mostly art. As with many aspects of our work, there isn’t necessarily a single correct, quantitative answer, but rather a number of different qualitative answers—all of which could be correct. Our job is to use our experience and our understanding of people to make judgment calls.

Analysis is a barrier that prevents many people from running card sorts. My suggestion is to leave your obsession with getting the correct answer at the door and accept that analysis gets messy. Not only does this set the right expectations about what card sorting can deliver, it might also open your eyes to other insights you would otherwise miss.