This month, I’d like to talk about why we should care about designing for users and their devices. As designers, how can we use information about device hardware and the ways users choose to employ it to make our designs better and to make our users, clients, and customers happier and more productive?

While users interact with their mobile devices in many ways, I’d like to focus on some of the areas where I see the greatest misunderstandings and the most problems and provide some simple guidelines to help UX designers get to a more complete understanding of device hardware.

Respect Orientation Choices

I often travel and work away from home. But I don’t like to miss my TV shows, so I get around the current limitations of digital distribution by watching shows that I’ve recorded at home via the DISH network using the DISH Remote Access app. Getting data from my personal video recorder (PVR) at home onto the network involves a little hardware setup, but this requires only simple, consumer-grade technology experience. Thus, when I am away from home, I can just open the DISH Remote Access app on my mobile phone or tablet, select a show, and watch it.

Unfortunately, most of the DISH Remote Access app works only in portrait mode, while the video player works only in landscape mode, so I have to hold the device one way to select a show and another way to watch it. My choices are either to pick up the device and rotate it a lot, as shown in Figure 1, or simply to leave it on its stand and turn my head sideways from time to time.

You can certainly encourage the use of specific orientations for certain tasks such as watching video. But you should almost never force anyone to use a specific device orientation. Respect a user’s personal preference, and provide the most complete functionality possible however they choose to use a device.

You may recall from last month’s column that perhaps half of Android phones have a hardware keyboard. A lot of these keyboards slide out from beneath the screen, making a phone a landscape-orientation device whenever the keyboard is in use. That means, when the keyboard is out, an app should almost always ignore orientation sensors and assume a user wants to enter content, with the phone oriented in the correct way for keyboard use.
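
To make the keyboard rule concrete for a specification, here is a minimal sketch of the decision logic. It is platform-neutral Python rather than real Android code, and the names `Orientation` and `effective_orientation` are my own illustrations, not part of any SDK.

```python
from enum import Enum


class Orientation(Enum):
    PORTRAIT = "portrait"
    LANDSCAPE = "landscape"


def effective_orientation(sensor: Orientation, keyboard_open: bool) -> Orientation:
    """Pick the orientation the user interface should render in.

    Hypothetical helper: when a slide-out keyboard is open, assume the
    user is holding the phone for typing and ignore the sensor reading.
    """
    if keyboard_open:
        return Orientation.LANDSCAPE
    # With the keyboard closed, follow whatever the sensor reports.
    return sensor
```

Because the rule is “almost always” rather than “always,” a real app may need exceptions, for example a device whose keyboard slides out along the short edge.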

Design for All Orientations

While you can use responsive design principles to make sure content stretches to fit the available space on a screen, you will be much better off and have more satisfied users if you customize an app’s user experience for each orientation.

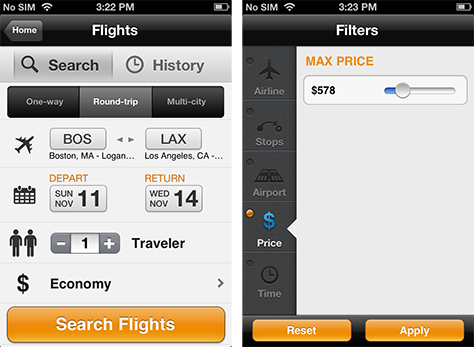

For example, if your product has a useful hierarchy of lists or displays multiple types or categories of useful information, one simple approach is to display either one or two columns of data depending on orientation, as in the Plume app for Android shown in Figure 2. There are other ways in which you can take advantage of additional space or, depending on the orientation of a device, cleanly use less space. For instance, your lists can provide varying amounts of information per line, by either adding columns or simply expanding the area for longer descriptions and not truncating content as much.
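
As a rough sketch of the one-or-two-column approach, the function below picks how many columns to show from the shape of the screen. The function name and the column names in the usage example are assumptions for illustration, and a real app would probably compare against a minimum column width rather than just width versus height.

```python
from typing import List


def visible_columns(columns: List[str], width_px: int, height_px: int) -> List[str]:
    """Return the columns to display for the current screen shape.

    Illustrative rule only: two columns when the screen is wider than it
    is tall (landscape), one column otherwise (portrait).
    """
    count = 2 if width_px > height_px else 1
    return columns[:count]
```

For example, `visible_columns(["Timeline", "Mentions", "Messages"], 800, 480)` keeps the first two columns, while swapping the dimensions to 480 by 800 keeps only the first.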

Never just pad out extra space with unrelated items or more advertising. Users will recognize that you’ve put a small-screen interface on their tablet. And never leave extra whitespace. While you might be able to persuade yourself that a user interface is clean and open, users will want to use all of the available space to view more information. They may even perceive that extra space as a sign that something is broken.

Also make sure all functions work correctly across orientations. Make sure a user interface doesn’t change, forget selection states, clear user-entered data, or scroll to a different place on a page during orientation changes. In just the last few weeks, I’ve seen all of these misbehaviors, so make no assumptions about development teams being on the same page as you regarding the proper implementation of an app for different device orientations. Specify behaviors clearly, then test them carefully.
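
One way to specify this behavior is to list the state that must survive a rotation and to state that the rebuilt screen receives it unchanged. The sketch below assumes a hypothetical `Screen` that is rebuilt on every orientation change. None of these names come from a real framework, and I store the first visible item rather than a pixel offset because pixel positions rarely map cleanly between orientations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ScreenState:
    """Everything the user would notice losing during a rotation."""
    selected_id: Optional[str] = None   # current selection, if any
    draft_text: str = ""                # text the user has typed so far
    first_visible_item: int = 0         # scroll position as an item index


class Screen:
    def __init__(self, orientation: str, state: Optional[ScreenState] = None):
        self.orientation = orientation
        self.state = state if state is not None else ScreenState()


def rotate(screen: Screen, new_orientation: str) -> Screen:
    """Rebuild the screen for a new orientation, carrying state across."""
    return Screen(new_orientation, screen.state)
```

A tester can then check each field after rotating: the same item is selected, the draft text is intact, and the same item sits at the top of the list.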

Understand Focus

Looking at a classic scroll-and-select user interface—on a desktop computer, mobile phone, or even a game console or TV—is the best way to understand how focus works. When navigating a list, a user uses a directional pad to scroll through the items in the list. It’s easy to tell which item has focus because it is highlighted or otherwise clearly indicated. This is the in-focus item. [2]

When designing user interfaces and interactions, I find it helpful to declare when something is in focus. One key reason I push for this is that there are a lot of devices with hardware keyboards and 5-way directional pads. Even iOS supports add-on keyboards. Design and build apps properly, so users can scroll through the items in a list using the arrow keys on their keyboards.
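
Declaring focus can be as simple as tracking which item in a list currently has it and how arrow keys move it. This is a minimal sketch with made-up names (`FocusList`, `handle_key`), not any platform’s focus API. It stops at the ends of the list rather than wrapping around, which is one design choice among several.

```python
from typing import List, Optional


class FocusList:
    """A list in which exactly one item has focus (if the list is not empty)."""

    def __init__(self, items: List[str]):
        self.items = list(items)
        # The first item has focus by default; an empty list has none.
        self.focus_index: Optional[int] = 0 if self.items else None

    def handle_key(self, key: str) -> None:
        """Move focus for 'up' and 'down'; ignore every other key."""
        if self.focus_index is None:
            return
        if key == "down":
            self.focus_index = min(self.focus_index + 1, len(self.items) - 1)
        elif key == "up":
            self.focus_index = max(self.focus_index - 1, 0)

    @property
    def focused_item(self) -> Optional[str]:
        if self.focus_index is None:
            return None
        return self.items[self.focus_index]
```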

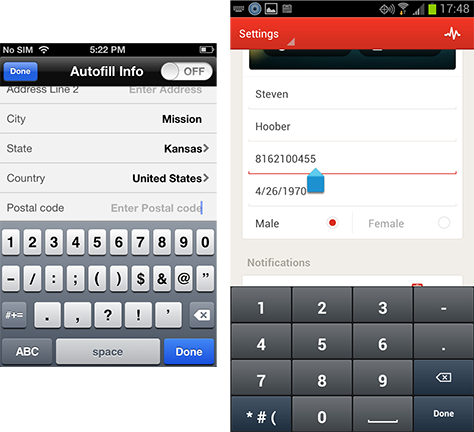

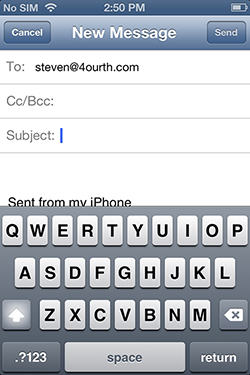

Focus conditions are still critically relevant for pure touch interfaces. Think of the many times you’ve tapped an item only to find that it’s not a link or button, but a field, as shown in Figure 3. There are many conditions like this where it’s necessary to select an item just to indicate focus. Form fields are a simple case, but you’ve probably designed many interactions that would be hover states on a desktop Web site. For example, a user might tap an item to reveal a bit more information or display a menu over the rest of the page.

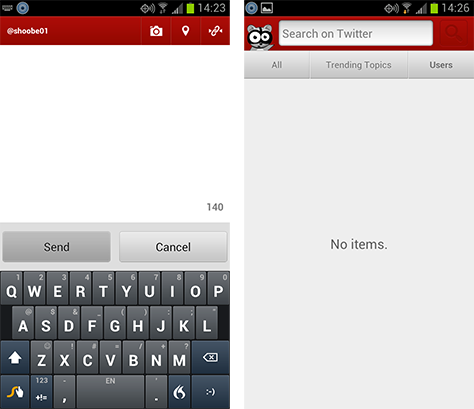

Still, you might ask, why does this matter for touch? If an operating system already supports this behavior, you might think all you need to do is provide form fields. Well, have you considered what granting focus can or should do? Seesmic, a Twitter client for Android, demonstrates both what granting focus by default can do and what happens when an app withholds it, as illustrated in Figure 4.

In this user interface, tapping the Compose button displays a full-page text area, in which a user can type whatever they want. Most usefully, the text area is in focus by default, so on all touch devices, the keyboard immediately appears on the screen, and a user can get right to typing. On the other hand, tapping the Search button usually displays an autocomplete list of favorite searches (though it is empty the first time) and a search box. Nothing is apparently in focus, and the user must deliberately tap the search box to start typing a new search string.

This user interface is almost a copy of the classic scroll-and-select address book layout on a phone, in which lists appear with search boxes. But the search box should always have focus by default. On feature phones, a user can scroll or type with equal facility and, using this Twitter client, could just as easily tap words to search or select an old search string, if available. This behavior would both expose the function more quickly and prevent a user from having to tap something that is both far up the screen and near many other functions.
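
The behavior I am describing could be specified along these lines. This is only a sketch of the interaction, not how Seesmic or any other client is built, and the `SearchScreen` class and its fields are hypothetical. The search box holds focus from the moment the screen opens, so typing narrows the saved searches without an extra tap.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SearchScreen:
    saved_searches: List[str]
    query: str = ""
    # Focus is granted on open, so no tap is needed before typing.
    focused: str = "search_box"

    def type_text(self, text: str) -> None:
        """Append typed characters to the query while the box has focus."""
        if self.focused == "search_box":
            self.query += text

    def suggestions(self) -> List[str]:
        """Saved searches that start with the query, ignoring case."""
        prefix = self.query.lower()
        return [s for s in self.saved_searches if s.lower().startswith(prefix)]
```

With an empty query, every saved search is listed, so a user can still select an old search string directly instead of typing.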