For example, it’s not enough to be a software developer yourself and assume that other developers would love a coding automation tool just because it would meet your own needs. The market might not want it. Or perhaps organizations wouldn’t pay for that kind of tool. Savvy investors will point that out.

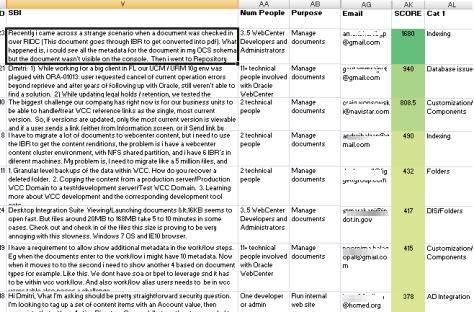

So how do you find out what your market really wants? If you’re working in a large enterprise and can’t talk to most of your users, how can you find out what users want? You might answer that you’ll conduct surveys. But do they really work?

The Problem with Surveys

Let’s face it: most surveys don’t give us definitive results. Even when the results seem to give us a good indication, we can’t know for sure. Why? Because most surveys are built on assumptions, and lots of them.

Smart researchers have figured out that many folks just love to make us happy, so they’ll tell us exactly what they think we want to hear. Questions such as “Are you satisfied with your current accounting system?” would most likely get you a “Yes,” even if the system were a nightmare to use. Users might compare it to their supplier’s system that uses paper, or they could give you a “Yes” just so you’ll stop bothering them with questionnaires.

So smart researchers use Likert scales to make survey answers more accurate, asking questions such as: “Your current accounting system is: very useful, somewhat useful, not really useful, or not useful at all?” Guess where most of the answers will be. They’ll be split between “very useful” and “somewhat useful.” So, to get more accurate data, smart researchers try using quantitative scales and ask questions such as “How many times a day do you use your accounting system?” and “How long does it take to load on average?”

But does that work? What if they were wrong to focus on the accounting system? What if, no matter how poor that system might be, the problem really lies in missing data—perhaps from major suppliers or subcontractors? What if that’s the real reason behind the screwed-up reports and all the additional time bookkeepers must spend working on the system, driving their time spent way above the industry average?

How would you figure that out with a survey? You can’t, unless you ask open-ended questions such as “How would you make your Accounting Department more efficient?” But that won’t work either. Why?

Why Can’t You Just Ask Users What They Want?

I’ll bet you know the answer: they won’t be able to tell you. People didn’t ask Henry Ford to build a car. They wanted faster carriage horses. They had no idea what a gasoline engine was and ridiculed him when they saw him driving his horseless carriage.

Observation doesn’t always work either! Watching people driving their carriages would have been of little value to Henry Ford—no matter how many people he observed or how much time he spent watching them.

Nobody asked Steve Jobs for an iPod either. They had no idea that it was even possible to store a thousand songs on a mini hard drive in an MP3 player that they could take with them anywhere. Sure, once Apple created the iPod, people were amazed and wanted it, but Apple had to conduct many interviews to find out that the market existed.

Interviews do work, but only if you ask the right kinds of questions. Interviews let you collect accurate data if you ask users about their problems first. So questions such as “What do you hate most about your current MP3 player?” would have worked. Apple would have learned about short battery life and having to load new songs every couple of days. But asking “What would you like to see in the perfect MP3 player?” would not have worked.

However, you need to conduct a lot of interviews, whether you’re going after a new market or just trying to improve a business process in a large corporation. You’ll need to talk to a lot of people before you start seeing different people describe the same types of problems over and over again, revealing the repeated patterns. And even when you do, how would you pick the problems that people feel most strongly about and that you’d really need to resolve?

Even if you could determine what problems they really need to solve, you might find that people wouldn’t be willing to pay for a product that solves them! Instead, they’d pay for a solution to the problem they want to solve—and that might be a very different problem.