Some surveys are similar. Your response may be required by law, and lack of response may be punished by a fine or worse.

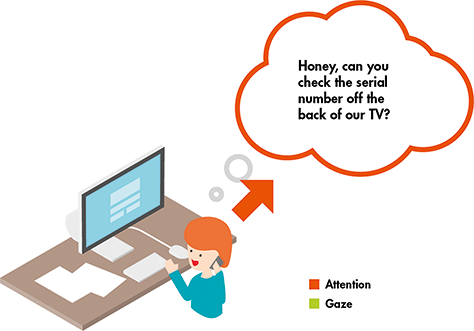

But in some ways, even “mandatory” forms and surveys are optional. When faced with a challenging form, the user may delay, abandon, or incur the cost of asking someone else, such as an accountant or family member, to tackle the form. All of these options increase the burden for the individual and pose potential problems for data quality. As a result, low response rates are now threatening the viability of the ordinary everyday survey, historically a powerful tool for social, academic, and market research. And costs increase—for the user and for the organization that wants the user’s data.

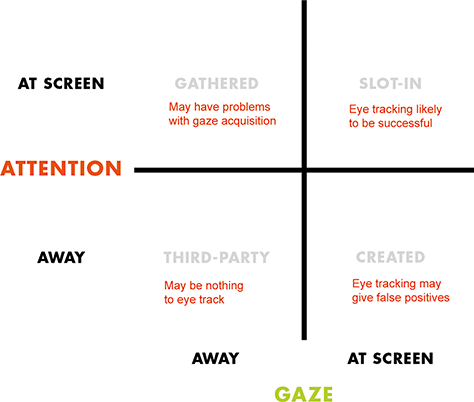

In this chapter, we explore what eye tracking can tell us about the user experience of forms and surveys. We then discuss when eye tracking is appropriate and when it can be misleading.

Our conclusions are:

- For simple forms and straightforward surveys, eye tracking can guide your design decisions.

- For more complex examples, consider your eye-tracking data only in light of data from your other usability findings and cognitive interviews.

Forms and Surveys Have a Lot in Common

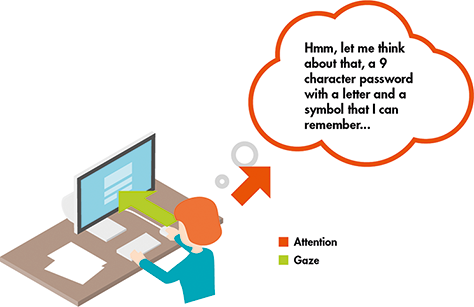

There are different types of forms, varying in the amount and type of information they ask for. For example, in some, users need merely to enter their username and password. However, in others, they need to enter quite a bit more. The amount of information and the cognitive resources required to complete forms can greatly impact eye-tracking data.

In this chapter, we focus on the form or survey itself (a sequence of questions and places for users to answer) rather than on the entire process of the users’ transactions or the data collection.

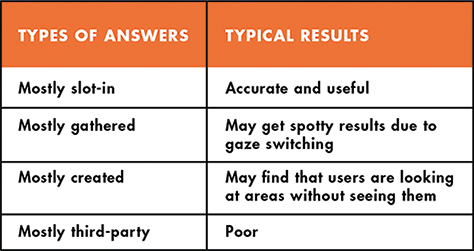

In this narrow sense, what is the difference between a form and a survey? Not very much. Both ask questions and provide ways for users to answer those questions. Broadly, we call something a “survey” if the responses are optional and will be used in aggregate, and a “form” if the responses are compulsory and will be used individually. But there can be overlaps. For example, sometimes a survey begins with a form (Figure 5.1).

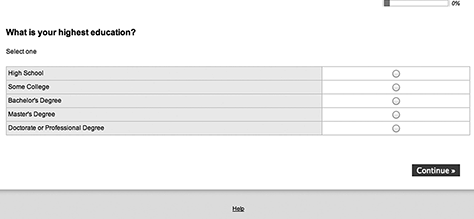

And sometimes a survey asks questions that will be used individually, or are compulsory (Figure 5.2).

We can talk about the two together in this chapter because whether it is a form or a survey, users interact with it in similar ways.

Some Examples of What We Can Learn from Eye Tracking Forms and Surveys

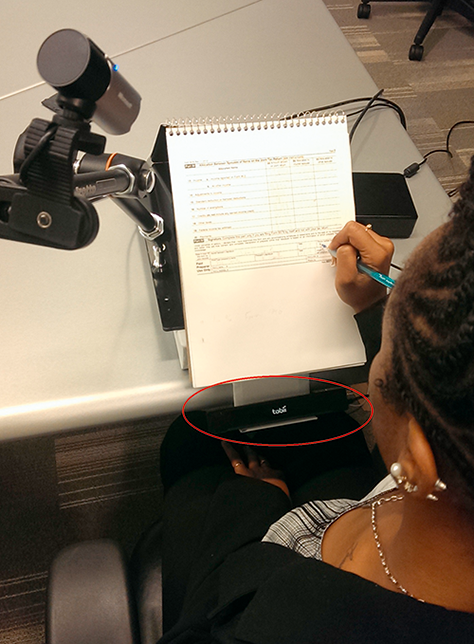

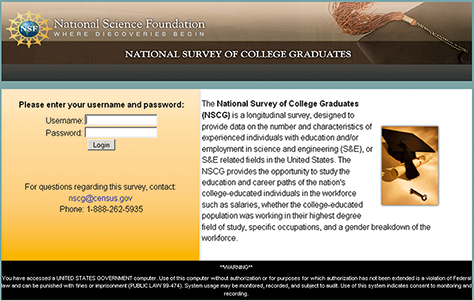

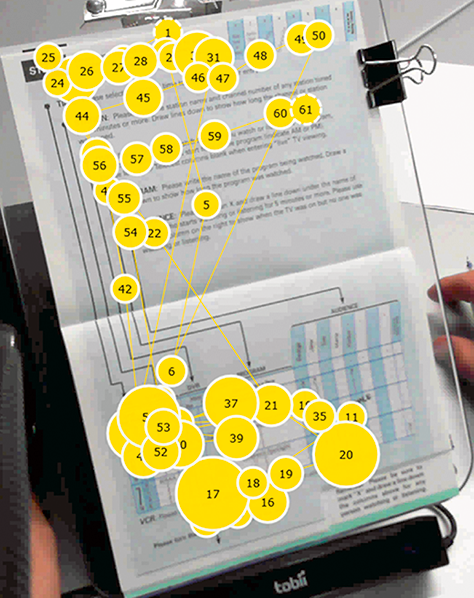

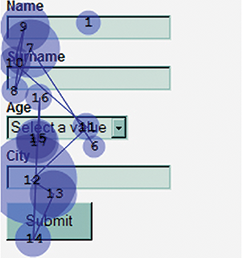

In many ways, eye tracking a form or survey is just like eye tracking anything else. Today we are even able to successfully obtain eye-tracking data from paper by mounting it to a clipboard, as in Figure 5.3. However, the different types of questions and layouts of questions and response options can play a big role in the quality of eye-tracking data. Let’s look at what we can learn about forms and surveys from eye tracking.

People Read Pages with Questions on Them Differently from Other Pages

You are probably familiar with the idea that “people read Web pages in an F-shaped pattern” (discussed further in Chapter 7). That is, they read the first few sentences, then the first few words of each line, and then a couple of sentences further down the page (perhaps in a new paragraph), and then the first few words of each line below that.

That F-shaped pattern may hold true for some content-heavy pages, but eye tracking reveals that people react entirely differently to pages that are full of questions and answer spaces. These differences are neatly revealed by the contrasting eye-tracking patterns in Figure 5.3.

When testing pages with questions on them, we consistently find that users avoid looking at the instructions. Instead, they focus on the questions.

In Figure 5.4, we see a typical example: gaze plots reveal that most people quickly looked for the “slots” to put their information in so they could move rapidly to their goal of finishing.

Do people ever read instructions on forms or surveys? Not very often, unless they have a problem with a question. Then they might. Or they might bail out. Figure 5.5 shows a typical pattern for two pages full of instructions: the participant quickly scanned them, then turned the page to get to the questions.

If your instructions are short, helpful, and placed only where needed, they might keep your users from giving up. If the questions themselves are too long, users may react to them as instructions and skip directly to the response options.

Eye tracking allowed us to identify some respondent behaviors that did not conform to the normative model of survey response. Whereas the model expects a respondent to read the question and then select a response option, we collected eye-tracking data that showed participants skipping questions and going directly to the response options. One thing we learned was that people take any shortcuts possible to finish a questionnaire, even in a laboratory setting. They have lives to live! If they can guess what the question was asking by looking at the response options, they will skip the question. Of course, their guess may not be right, and a design intervention may be needed to ensure that they have read the question. Thus, the results of eye tracking can inform survey design in many ways.—Betty Murphy, formerly Principal Researcher, Human Factors and Usability Group, U.S. Census Bureau (currently Senior Human Factors Researcher, Human Solutions, Inc.)

These eye-tracking results lead to three important guidelines about instructions for forms and surveys:

- Write your instructions in plain language.

- Cut instructions that users do not need.

- Place instructions where users need them.

Write Your Instructions in Plain Language

Many instructions are written by technical specialists who concentrate on the subject matter, not clear writing. It is up to the user experience professional to get the instructions into plain language.

For example, watch the jargon (Redish, 2012). The word “cookie” may be familiar to your users, but are they thinking about the same type of cookie (Figure 5.6)?

Cut Instructions That Users Do Not Need

Once users have clicked on an online form or survey, they do not want instructions on how to fill in the form. They have passed that point.

Limit yourself to the briefest of statements about what users can achieve by filling in the form. Provide a link back to additional information if you like.

Users do not want to be told that a form or survey will be “easy and quick,” and they do not want claims about how long the form will take.

- If the form is genuinely easy, the users can just get on with it.

- If it is not, you have undermined the users’ confidence straight away.

- Exception: if it is going to be an exceptionally lengthy task, perhaps several hours, then it might be kind to warn users about that. (And definitely tell them about the wonderful save-and-resume features you have implemented.)

Place Instructions Where Users Need Them

You may need some instructions on your forms and surveys. Some can actually be quite helpful, such as:

- A good title that indicates what the form is for

- A list of anything that users might have to gather to answer the questions

- Information on how to get help

- A thank-you message that says what will happen next.

The title and list of things to gather need to go at the beginning, the information about help in the middle, and the thank-you message at the end.

People Look for Buttons Near the Response Boxes

There is a long-running discussion in many organizations about whether the “OK” or “Next” button (properly, the primary action button) should go to the left or right of the “Cancel,” “Back,” or “Previous” buttons (properly, the secondary action buttons).

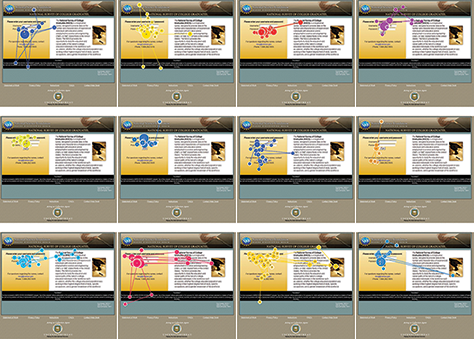

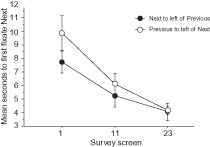

Eye tracking reveals that users learn where to look for the primary navigation button quite quickly, no matter where it is placed, as in Figure 5.7 (Romano Bergstrom et al., under review). By the time participants reached screen 23, the layout of the buttons no longer affected them.

But they do not like it when the Next button is to the left of the Previous button.

In a typical example where participants were asked to complete a survey with ‘Next’ to the left of ‘Previous’, many participants said that it was counterintuitive to have ‘Previous’ on the right. One participant said that she disliked the “buttons being flipped” although she liked the look and size of the buttons. Another participant said that having ‘Next’ on the left “really irritated” him, and another said that the order of the buttons was “opposite of what most people would design.” In contrast, for the version with ‘Previous’ to the left of ‘Next’, no one explicitly claimed that the location of the buttons was problematic. One participant said that the buttons looked “pretty standard, like what you would typically see on Web sites.” Another said the location was “logical.”—Romano and Chen, 2011.

Eye tracking reveals that the important thing to users is not where the buttons are placed relative to each other; it is where the buttons are placed relative to the fields (Jarrett, 2012). Users hunt for their primary action button when they believe they have finished the entries for that page of the form or survey, and they generally look for it first immediately under the entry they have just filled in, as in the schematic in Figure 5.8.

Place Navigation Buttons Near the Entry Boxes

To ensure that users can find your primary action button easily (and preferably before they get to page 23 of your form or survey), place it near the left-hand edge of the column of entry boxes. Then design your secondary action buttons so that they are clearly less visually obvious than the primary button and are placed sensibly; in particular, put Previous toward the left edge of the page.

People Fill in Forms More Quickly if the Labels Are Near the Fields

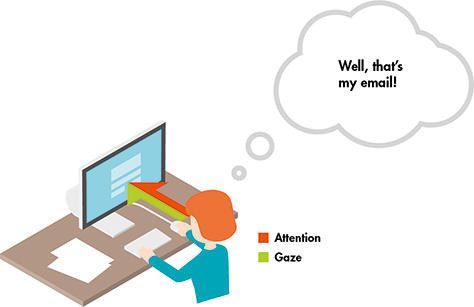

The schematic in Figure 5.8 also illustrates the typical reading pattern for a form page:

- Look for the next place to put an answer (a “field”), then

- Look for the question that goes with it (the “label”).

Just as with the placement of the primary action buttons, there is a long-running discussion over where the labels should go relative to the fields. Or at least, this topic was widely debated until Matteo Penzo (2006) published an eye-tracking study showing that users fill in forms more quickly if the labels are near the fields.

Penzo claimed that forms are filled in more quickly if the labels are above the boxes, as shown in Figure 5.9. A subsequent study (Das et al., 2008) found no difference in speed of completion, even in a simple form, but there appears to be an advantage for users if the labels are easy to associate with the fields.

For example, if the labels are too far away, as in Figure 5.10, then users’ eyes have to work harder to bridge the gap, and they may associate the wrong label with the field.

Place the Label Near the Entry Field

Help users by putting the labels near the fields and making sure that each label is unambiguously associated with the correct field. Whether you decide to place the labels above or below the entry fields, make it easy on the user by being consistent.
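As an illustration of this guideline for Web forms, here is a minimal sketch that generates the markup for each field through a single helper, so that every label sits directly above its box and placement stays consistent across the form. The function names `render_field` and `render_form`, the `field` class name, and the sample fields are hypothetical; tying the label to the box with the HTML `for`/`id` pairing is an assumption about how you might make the association unambiguous, not something the studies above tested.

```python
from html import escape


def render_field(field_id: str, label_text: str) -> str:
    """Return HTML for one text field with its label directly above the box."""
    safe_id = escape(field_id, quote=True)
    return (
        f'<div class="field">\n'
        # The label comes first and points at the input through for/id.
        f'  <label for="{safe_id}">{escape(label_text)}</label><br>\n'
        f'  <input type="text" id="{safe_id}" name="{safe_id}">\n'
        f'</div>'
    )


def render_form(fields: list[tuple[str, str]]) -> str:
    """Render every field with the same label placement, for consistency."""
    return "\n".join(render_field(fid, text) for fid, text in fields)


print(render_form([("first-name", "First name"), ("email", "Email address")]))
```

Because every field goes through the same helper, changing the label position later means changing one function rather than hunting through the whole form.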

Users Get Confused about Whether They Are Supposed to Write Over Existing Text

If you were thinking of “helpfully” including a hint—or even worse, the label—in an entry field, think again. When there is text in the entry field, users get confused about whether they are supposed to write or type over the existing text.

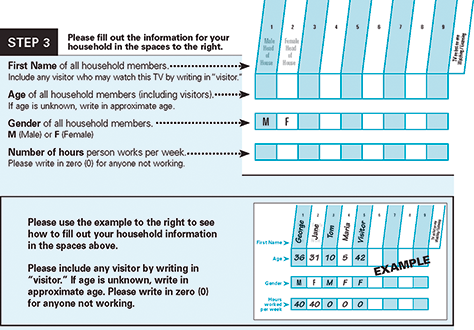

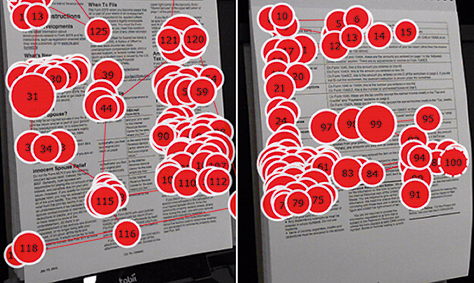

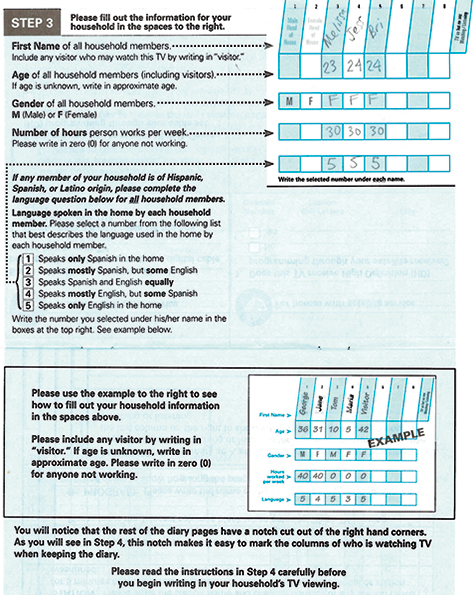

For example, in the form in Figure 5.11, participants consistently skipped over the first two entries and wrote in the names of household members starting in the third entry box, as shown. They did this even though there was an example at the bottom showing them how to use the form. They said things like: “If you want someone to write something in, you shouldn’t have writing in the box,” “I’m not sure if I’m supposed to write in over the lettering,” and “Where am I supposed to write it? On top of this?”

We have many times observed the same behavior in Web and electronic forms and surveys (Jarrett, 2010b).

Do Not Put Any Text Inside the Response Boxes

Do not put anything where users are meant to type or write. Leave the insides of boxes clear of labels, hints, and any other types of clutter so it is clear where users are supposed to write.
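For Web forms, one way to check a page against this guideline is to scan its markup for entry boxes that already contain text. The sketch below uses Python's standard `html.parser` module; the class name `PrefilledTextFinder`, the helper `find_prefilled_fields`, and the sample markup are hypothetical. It assumes that hints arrive through the `placeholder` attribute or a preset `value`, and it will also flag legitimately prefilled values (such as data the user entered earlier), so treat the result as a list to review rather than a list of definite errors.

```python
from html.parser import HTMLParser


class PrefilledTextFinder(HTMLParser):
    """Collect text inputs and textareas that carry a placeholder or preset value."""

    def __init__(self) -> None:
        super().__init__()
        self.flagged: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag not in ("input", "textarea"):
            return
        if tag == "input" and attr.get("type", "text") not in ("text", "email", "tel"):
            return  # ignore buttons, checkboxes, hidden fields, and so on
        if attr.get("placeholder") or (tag == "input" and attr.get("value")):
            self.flagged.append(attr.get("id") or attr.get("name") or "(unnamed)")


def find_prefilled_fields(html: str) -> list[str]:
    """Return the ids (or names) of entry boxes that have text inside them."""
    finder = PrefilledTextFinder()
    finder.feed(html)
    return finder.flagged


sample = """
<label for="name">Name</label>
<input type="text" id="name" placeholder="Enter your name">
<input type="text" id="city">
<input type="submit" value="Next">
"""
print(find_prefilled_fields(sample))
```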

Users May Miss Error Messages That Are Too Far from the Error

The best error message is one that never happens, because your questions are so clear and easy to answer that users never make any mistakes. Realistically, some problems will occur: miskeying, misunderstanding, or failing to read part of a question.

When an error occurs, it is important to make sure that an appropriate message appears where users will see it and that it is easy to find the problematic part of the form.

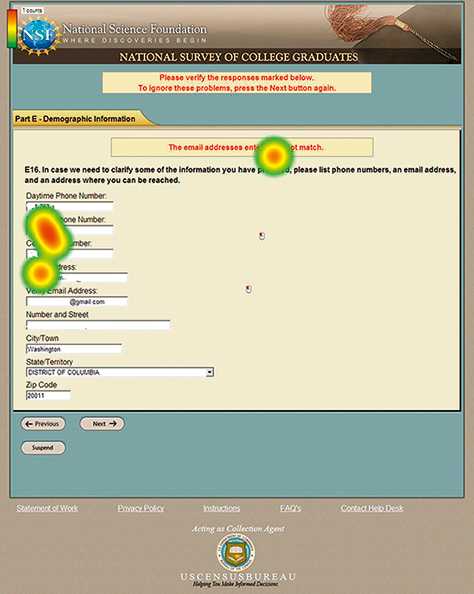

Romano and Chen (2011) tested a survey that had two “overall” error messages: one at the top of the page, and one at the top of the problematic question. The screenshot in Figure 5.12 illustrates the problem: users expect a single overview message, not one that is split into two places. In fact, they rarely or never saw the uppermost part of the message, which explained that the question could be skipped. Although correcting the problem is preferable, skipping the question would be better than dropping out of the survey altogether, and users who did not see the upper message might simply drop out.

We also often see users have difficulties when the error message is far away from the main part of the survey, as shown in Figure 5.13. This forces respondents to turn their attention away from the main survey to read the error message, then look back to the survey to figure out where the error is.

Put Error Messages Where Users Will See Them

Make it easy on your users. Place the error message near the error so the user does not have to figure out what and where it is. Be sure to phrase the messages in a positive, helpful manner that explains how to fix the errors.

Our recommendations are:

- Put a helpful message next to each field that is wrong.

- If there is any risk that the problematic fields might not be visible when the user views the top of the page, then include an overall message that explains what the problem(s) are (and make sure it deals with all of them).

For more information about what error messages should say, see “Avoid Being Embarrassed by Your Error Messages” (Jarrett, 2010a).
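The two recommendations above can be sketched for a Web form as follows. This is only an illustration under stated assumptions: the function `render_with_errors`, the `include_summary` flag, the CSS class names, and the sample fields and message are all hypothetical. Each message is placed directly after the field it refers to, and the optional overall message lists every problem in the same order as the fields.

```python
from html import escape


def render_with_errors(
    fields: list[tuple[str, str]],
    errors: dict[str, str],
    include_summary: bool = True,
) -> str:
    """Render fields, placing each error message directly beside its field."""
    parts = []
    if errors and include_summary:
        # The overall message covers every problem, in field order, in case
        # the problematic fields are not visible from the top of the page.
        items = "".join(
            f"<li>{escape(label)}: {escape(errors[fid])}</li>"
            for fid, label in fields
            if fid in errors
        )
        parts.append(f'<div class="error-summary"><ul>{items}</ul></div>')
    for fid, label in fields:
        parts.append(f'<label for="{fid}">{escape(label)}</label>')
        parts.append(f'<input type="text" id="{fid}" name="{fid}">')
        if fid in errors:
            parts.append(f'<span class="field-error">{escape(errors[fid])}</span>')
    return "\n".join(parts)


print(
    render_with_errors(
        [("email", "Email"), ("age", "Age")],
        {"age": "Enter your age as a number, for example 42."},
    )
)
```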

Double-Banked Lists of Response Options Appear Shorter

There is a long-running discussion among researchers about what is best for a long list of response options:

- A long scrolling list, or

- A double-banked list (i.e., split in half and displayed side by side).

A benefit of a long scrolling list is that the items visually appear to belong to one group; however, if the list is too long, users will have to scroll up and down to see the complete list, and they may forget items at the top of the list when they read items at the bottom of the list.

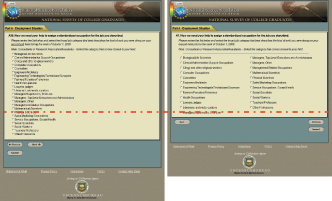

With double-banked lists, there is potentially no scrolling: users may see all options at once (if the list is not too long), and the list may appear shorter. But users may not realize that the right-hand half of the list relates to the question.

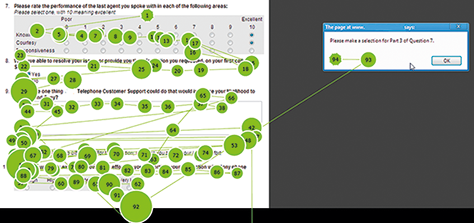

Romano and Chen (2011) tested two versions of a survey: one had a long scrolling list of response options (shown on the left in Figure 5.14), and one had a double-banked list (shown on the right). Participants tended to look at the second half of the list more quickly and more often when it was double-banked. Most participants reported that they preferred double-banked lists.
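The double-banked layout described above (the list split in half and the halves displayed side by side) can be sketched as a small helper. The function name `double_bank` and the sample options are hypothetical, and the choice to give the left column the extra item when the count is odd is an assumption, not a finding from the study.

```python
from itertools import zip_longest


def double_bank(options: list[str]) -> list[tuple[str, str]]:
    """Split options in half and pair them row by row (left column, right column)."""
    half = (len(options) + 1) // 2  # left column takes the extra item if odd
    left, right = options[:half], options[half:]
    return list(zip_longest(left, right, fillvalue=""))


for left, right in double_bank(["Apple", "Banana", "Cherry", "Date", "Elderberry"]):
    print(f"{left:<12}{right}")
```

The original order is preserved down the left column and then down the right column, so the second column continues the list rather than interleaving with the first.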

Avoid Long Lists of Response Options

While eye-tracking data on this topic is still limited, double-banked lists can appear shorter, and shorter forms often seem more appealing to users. If you must present a long list of options, a double-banked display can help, provided the columns are not too far apart so that the two lists are clearly part of the same set of options.

But to be clear: we are talking about a double-banked set of response options within a single question. This is definitely not a recommendation to create forms that have two columns of questions, which is clearly a bad idea because users often fail to notice the right-hand column (e.g., Appleseed, 2011).

However, the challenge of the long list of options neatly illustrates the limitations of a purely visual approach to form and survey design. Better solutions include:

- Breaking long lists into smaller questions or a series of yes/no questions

- Running a pilot test, then reducing the list of options to the ones that people actually choose

- Running a pilot test, then reducing the list of options to a small selection of the most popular ones, with a “show me more” option that allows users to choose from a longer list if necessary.
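As a rough sketch of the last two ideas, the helper below takes the answers collected in a pilot test and splits the full list of options into a short list of the most frequently chosen ones and a remainder that could sit behind a “show me more” control. The function name `shortlist_options`, the `top_n` parameter and its default, and the sample data are all hypothetical; how many options to keep is a judgment call that the pilot data can inform but not decide.

```python
from collections import Counter


def shortlist_options(
    pilot_answers: list[str], all_options: list[str], top_n: int = 5
) -> tuple[list[str], list[str]]:
    """Return (shortlist, remainder) based on how often each option was chosen."""
    counts = Counter(a for a in pilot_answers if a in all_options)
    # Sort by count, highest first; the sort is stable, so ties keep
    # the original option order.
    ranked = sorted(all_options, key=lambda option: -counts[option])
    # Options nobody chose never make the shortlist.
    shortlist = [o for o in ranked[:top_n] if counts[o] > 0]
    remainder = [o for o in all_options if o not in shortlist]
    return shortlist, remainder


pilot = ["Bus", "Car", "Car", "Bike", "Car", "Bus"]
options = ["Bike", "Bus", "Car", "Ferry", "Tram"]
print(shortlist_options(pilot, options, top_n=2))
```

Setting `top_n` to the full length of the list gives the simpler variant: only the options that people actually chose, with the unchosen ones left in the remainder.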